DURAN | Cognitive Sovereignty | How Understanding MKULTRA's Subproject 68 Could Be The Key To Cognitive Security

Executive Summary

This white paper examines the evolution of psychological influence capabilities from Cold War psychiatry to 21st‑century AI ecosystems. It begins with a historical account of Donald Ewen Cameron’s MKULTRA‑funded experiments in “psychic driving,” then traces conceptual continuities between his attempts to rewrite mental patterns and modern systems that algorithmically shape attention, belief, and emotion at societal scale.

Crucially, this paper reframes the discussion toward cognitive security. The central argument is not that governments should revive MKULTRA‑style coercive methods. Instead, it is this:

If democratic institutions fail to regulate, secure, and ethically manage psychological influence environments, then private actors, foreign adversaries, and unaccountable AI platforms will define the cognitive reality in which citizens think.

The United States—like all modern democracies—faces the emergence of a new kind of national vulnerability: the unprotected mind. Without proactive standards, transparency requirements, and defensive cognitive infrastructure, citizens become exposed to influence operations that neither they nor their government can see or control.

This paper proposes a strategic doctrine of Cognitive Sovereignty and outlines the need for a National Cognitive Defense Framework to protect the public’s ability to think clearly, freely, and safely in an age of ubiquitous digital influence.

Table of Contents

1. Introduction | From Psychic Driving to Cognitive Sovereignty: Why This Paper Matters Now

2. Historical Foundations: Donald Ewen Cameron and MKULTRA | State Psychology and the Origin of Institutionalized Cognitive Engineering

3. Psychic Driving: Theoretical Aims and Ethical Collapse | Breaking Down and Rebuilding the Mind: Theory, Application, Fallout

4. From Analog Coercion to Digital Entrainment | How Surveillance Capitalism Revived and Scaled MKULTRA’s Core Methods

5. Algorithmic Influence as Psychic Driving at Scale | Social Media, Repetition, and Digital Behavior Conditioning in the Wild

6. The Post-Trump Expansion of the Private Intelligence Industry | Decentralized PsyOps, Narrative-for-Hire, and the Strategic Collapse of Oversight

7. Legal Infrastructure for Domestic Narrative Manipulation: The Obama-Era Revisions | The Smith-Mundt Modernization Act, CVE, and the Return of Legalized Influence Ops

8. Synthetic Reality Architectures and the Erosion of Shared Truth | From Personalized Feeds to Engineered Consensus: The Death of Objective Reality

9. A Framework for Cognitive Sovereignty in the Age of Digital Entrainment | Five Strategic Pillars to Defend Mental Autonomy and Rebuild Epistemic Integrity

10. Conclusions and Strategic Recommendations | Mental Autonomy Is the Final Frontier: The Time to Act Is Now

1. Introduction

Psychic Driving, Indoctrination, and the Struggle for Cognitive Power

At its most fundamental level, psychological influence is about shaping belief through exposure, repetition, and controlled disruption of existing thought patterns. This core dynamic, explored in crude and unethical form by mid‑20th‑century psychiatry, has matured into a strategic tool employed by governments, militaries, and, increasingly, private corporations and foreign adversaries. Understanding how this works, and who controls it, is essential to any 21st‑century security doctrine.

In the 1950s and early 1960s, psychiatrist Donald Ewen Cameron, with covert funding from the CIA’s MKULTRA program, pursued a radical idea: that the mind could be erased and rebuilt using coercive techniques such as sensory deprivation, drug-induced states, and repeated audio messaging, a process Cameron called psychic driving. The scientific foundations were unsound and the results were devastating, but the effort illuminated something crucial: under extreme conditions, human cognition becomes vulnerable, plastic, and programmable.

This same dynamic is echoed—ethically and systematically—in one of the most common and socially accepted forms of institutional influence: military basic training. In the armed forces, new recruits are subjected to a controlled breakdown and rebuild process: intense physical stress, deprivation of sleep and comfort, uniformity of dress and language, repetition of slogans, and constant reinforcement of hierarchical structures. The goal is not to destroy identity, but to strip away civilian individualism and reconstruct a new identity: that of the soldier.

Both psychic driving and military indoctrination rely on a two-stage psychological model:

Disruption or depatterning (through stress, confusion, repetition, or deprivation)

Reconstruction through controlled exposure to new norms, values, and narratives

The military controls this process tightly and ethically (at least by institutional standards), within a sovereign framework designed for national defense and cohesion. But today, the same principles can be—and are—replicated by foreign influence operations, AI-powered media platforms, and private intelligence contractors, operating far outside the realm of democratic oversight.

This marks the core threat of the 21st-century cognitive battlespace:

The power to influence identity, behavior, and belief no longer requires command of a military, control over education, or access to classified technologies. It requires only data, algorithms, and attention.

Where Cameron used sedation and tape recorders, today's actors use social media feeds, content recommendation engines, emotional data profiling, and automated engagement loops. Where militaries once had a monopoly on structured identity formation, any actor with technological sophistication and psychological insight can now replicate these dynamics at scale—without accountability.

This transformation collapses the boundary between psychological warfare and consumer manipulation, between institutional indoctrination and algorithmic entrainment. As a result, citizens today are exposed to continuous low-intensity psychic driving—not in padded rooms, but in digital environments optimized to hijack emotion, repetition, and belief formation.

In this context, cognitive sovereignty—the ability of individuals and societies to protect their mental autonomy—becomes a national security imperative. This white paper argues that if democratic governments do not establish transparent, ethical, and enforceable boundaries around psychological influence operations, they will cede the architecture of belief formation to adversarial states, unregulated platforms, or profit-driven psychological warfare firms.

The question is no longer whether psychological influence is occurring. It is whether it will be governed by public institutions accountable to the people, or left in the hands of foreign actors and private enterprises accountable to no one.

2. Historical Foundations: Donald Ewen Cameron and MKULTRA

Early Cognitive Engineering, State Secrecy, and the First Psychological Frontier

When evaluating the emergence of modern psychological influence systems, it is tempting to view the CIA’s MKULTRA project as a bizarre relic of Cold War paranoia. But this interpretation misses the true historical value—and warning—of MKULTRA and its most infamous collaborator, Dr. Donald Ewen Cameron. These experiments were not merely unethical—they were prototypes of a mindset: that human cognition could be systematically broken down, reshaped, and deployed toward strategic ends.

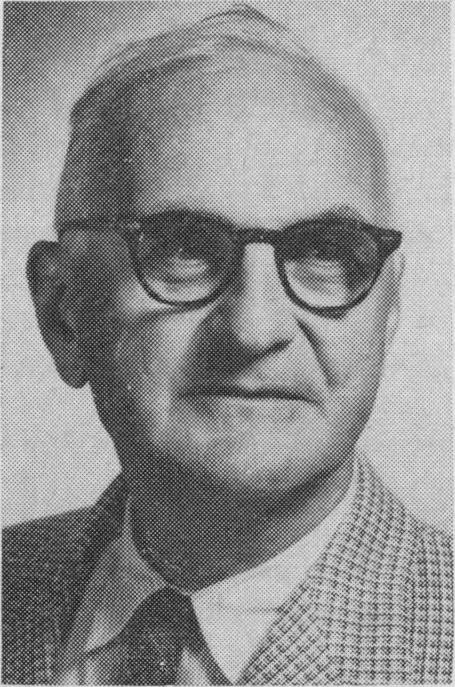

Donald Ewen Cameron was not an outsider. He was among the most elite psychiatric authorities in the West: President of the American, Canadian, and World Psychiatric Associations, and director of the Allan Memorial Institute at McGill University in Montreal. Far from operating in obscurity, he stood at the center of postwar psychological authority. When the CIA, working through its front organization the Society for the Investigation of Human Ecology, funded Cameron’s research under Subproject 68 of MKULTRA, it was not recruiting a rogue agent; it was enlisting the full institutional weight of North American psychiatry in the service of cognitive manipulation.

Cameron’s experiments were based on a two-stage theory of psychological intervention:

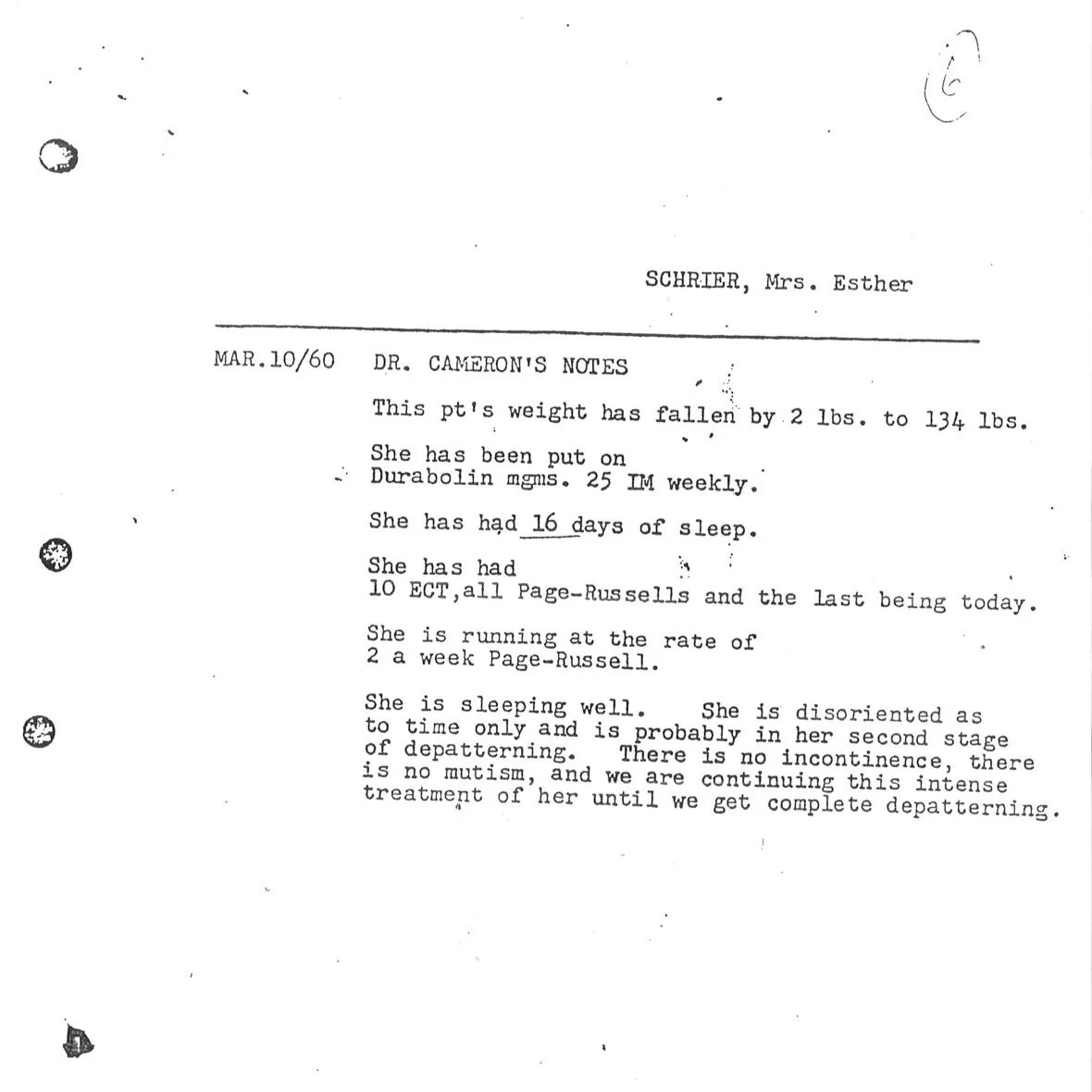

Depatterning – the deliberate collapse of a subject’s mental structure through massive doses of electroshock therapy, prolonged chemically induced comas, sensory deprivation, and experimental drugs such as LSD.

Repatterning (Psychic Driving) – the attempt to rebuild the subject’s mind through continuous, repetitive messaging—sometimes over hundreds of thousands of recorded iterations, played to patients in a sedated or dissociated state.

While these methods were later exposed as scientifically invalid and ethically indefensible, their structural logic paralleled techniques long used in military basic training: deconstruct the individual’s existing identity, then replace it with a new, functional one. The difference is that military indoctrination is bounded by law, purpose, and national sovereignty. MKULTRA was covert, coercive, and unaccountable.

This is what makes MKULTRA historically important—not just its abuses, but its foresight. It demonstrated that:

States were willing to invest in programmable cognition as a tool of national security

Elite institutions could be recruited into secret psychological warfare programs

The line between therapeutic science and ideological engineering could be easily crossed

The mind itself could be treated as a strategic substrate—subject to manipulation, optimization, and control

The Rockefeller Commission and Church Committee investigations of the mid-1970s ultimately exposed MKULTRA, sparking public outrage and limited reforms. But the strategic appetite it revealed, to control not just bodies or borders but beliefs and identity structures, never disappeared. Instead, it evolved. And with the explosion of behavioral data, AI-powered targeting, and privatized intelligence services in the 21st century, the same logic that animated MKULTRA has now escaped its original custodians.

Today, psychic driving no longer requires CIA labs or classified budgets. It can be reproduced at scale by social media companies optimizing content for engagement, or by foreign adversaries microtargeting voters with psychographic messaging, or by private contractors running digital influence ops with no legal constraints.

Cameron’s work is thus not just a dark chapter—it is the blueprint for a new strategic category: cognitive warfare, defined by the collapse of individual psychological resistance and the systemic construction of belief via engineered environments.

The original lesson of MKULTRA is not that governments are always the greatest threat to the mind. The deeper truth is more destabilizing:

Once the techniques of cognitive manipulation are developed, they do not stay in the lab. They migrate. They decentralize. And unless explicitly constrained, they reemerge in forms more efficient, more scalable, and less accountable than their originators ever imagined.

3. Psychic Driving: Theoretical Aims and Ethical Collapse

From Mental Erasure to Synthetic Reality Engineering

The method known as psychic driving was conceived by Dr. Donald Ewen Cameron as a revolutionary psychiatric intervention, one that would break down and rebuild the human mind. Though Cameron’s methods have since been discredited and condemned as abusive and unscientific, the underlying logic he operated from (that the mind could be deconstructed, made vulnerable, and reprogrammed through repetition and controlled exposure) remains chillingly relevant today.

Psychic driving was based on a two-phase model of psychological overwrite:

Depatterning: Collapse the patient’s cognitive coherence through high-intensity electroconvulsive therapy, drug-induced comas, isolation, and sensory disruption. This stage sought to reduce the subject to a mentally malleable state—a psychological “tabula rasa.”

Repatterning: Once the subject was rendered passive, Cameron subjected them to endless repetition of recorded messages, often delivered while sedated, in isolation, or under conditions of mental confusion. These messages were not neutral—they were prescriptive, often designed to install specific behaviors or attitudes, such as emotional dependence or compliance.

The goal was not simply treatment—it was mental reconstruction, an act of psychological authorship by the clinician over the identity of the subject. This was not therapy. It was programming.

The Ethical and Scientific Collapse

Cameron’s practices eventually unraveled under scrutiny. His methods lacked any empirical basis in neuroscience or psychology, and they failed to produce meaningful clinical results. Patients subjected to psychic driving often emerged with severe cognitive damage, emotional trauma, memory loss, and long-term psychological dysfunction. Many were unaware they were even part of an experimental program.

From today’s standpoint, psychic driving is remembered as a case study in the abuse of institutional power, the danger of pseudoscientific ambition, and the fragility of informed consent in contexts of authority. It represents a total ethical collapse, not just by Cameron, but by the institutions that enabled him—including the CIA, McGill University, and the North American psychiatric establishment.

And yet, despite its failure as science, psychic driving remains crucial—not as a technique, but as a precursor of an idea:

That minds can be reshaped through immersion, disruption, and carefully repeated narrative exposure.

Psychic Driving Reimagined by Machines

What Cameron attempted with drugs, tape loops, and sensory deprivation can now be reproduced—more precisely, more invisibly, and more effectively—by AI-powered content ecosystems, behavioral surveillance systems, and synthetic digital agents. Today, depatterning no longer requires sedation. It can be achieved through information overload, emotional exhaustion, and algorithmic confusion. Repatterning no longer requires a psychologist in a white coat—it is automated, real-time, and adaptive.

But the most dangerous evolution is not just the precision of modern influence—it is the creation of fully synthetic cognitive environments, constructed entirely by machines.

AI can now generate:

Tailored social media feeds filled with artificially amplified opinions

Fake peer communities composed of bot accounts or synthetic identities

Simulated influencers or relationships designed to earn emotional trust

Hyper-personalized content loops based on predictive emotional modeling

In such environments, individuals can be immersed in a reality that has been entirely engineered for them, populated by agents and content designed to reinforce a specific narrative, ideology, behavior, or belief system—without any indication that what they’re experiencing is fabricated.

This is psychic driving at the next level: not repeated audio loops in a hospital bed, but an entire digital life curated by invisible systems, designed to bypass critical thinking, isolate the subject from alternative views, and reorient identity without confrontation or awareness.

Cameron’s patients at least knew they were confined in a hospital. Today’s subjects often have no sign that anything is happening at all.

From Psychological Manipulation to Environmental Control

The transition from MKULTRA-style psychic driving to modern influence systems represents a shift in tactics—from direct control of the subject, to indirect control of the subject’s world.

This is a subtle but profound transformation:

Where Cameron sought to invade the mind, AI systems now construct the environment that the mind interprets as real.

Where psychic driving used brute force, modern systems use immersive persuasion.

Where the hospital room was the containment space, today’s containment space is the algorithmically filtered internet itself.

Such systems don’t require overt deception or coercion. They simply control what is shown, repeated, and excluded—leveraging the same core principle as psychic driving: exposure shapes cognition.

In this way, modern influence technologies have resurrected the original premise of MKULTRA—that identity is modifiable and narrative exposure is the mechanism of control—but without needing to violate physical autonomy. They operate with consent, or rather, the illusion of it.

The Strategic Implication

The danger is not just that individuals can be manipulated. It is that entire populations can be placed inside environments where narrative exposure is algorithmically designed, and where critical agency is neutralized by convenience, emotional resonance, and invisibly curated consensus.

These environments can be shaped by:

Foreign state actors seeking political instability

Private intelligence firms executing narrative warfare for hire

Corporate platforms maximizing attention and revenue at any ethical cost

Autonomous AI systems optimizing for engagement without understanding their psychological impact

Unless explicitly regulated and publicly governed, these systems will evolve not toward truth, but toward behavioral efficiency and strategic dominance.

Cameron’s psychic driving may have failed in its time—but the idea behind it now lives on, not in the hospital ward, but in every device, every feed, and every algorithmic filter that separates citizens from the raw reality of the world.

And if we do not act, the next era of influence will not be about propaganda. It will be about total synthetic immersion—engineered not to break the mind, but to shape it invisibly, continuously, and completely.

4. From Analog Coercion to Digital Entrainment

The Evolution of Influence from Physical Compulsion to Algorithmic Conditioning

The strategic manipulation of human cognition has not disappeared since the era of MKULTRA—it has been refined, routinized, and rendered frictionless. Where Cold War-era programs like psychic driving required chemical sedation, physical isolation, and direct institutional control, contemporary systems achieve similar effects through immersive behavioral environments, designed by machine learning systems and behavioral economists, and optimized for continuous exposure rather than episodic trauma.

This transformation marks the progression from analog coercion—a form of overt psychological disruption enforced by state authority—to digital entrainment, a process by which human attention, belief, and behavior are subtly conditioned by algorithmic systems operating below the threshold of conscious awareness.

Defining Digital Entrainment

In neuroscience and behavioral psychology, entrainment refers to the gradual synchronization of cognitive or physiological processes with external stimuli. In digital environments, entrainment occurs when users’ attention cycles, emotional states, and belief structures become aligned with the logic of the systems they interact with.

Digital entrainment can thus be understood as the continuous conditioning of human behavior through adaptive exposure to personalized, emotionally resonant, and repetition-based content systems—engineered by AI to maximize engagement, often at the expense of agency.

Where psychic driving once used crude repetition (tape loops and drug-induced passivity), modern platforms use behavioral surveillance, real-time feedback optimization, and emotional data mining to reinforce belief, steer behavior, and shape identity. These systems draw on psychological processes well established in the literature: Hebbian learning, affective priming, social validation, and cognitive bias reinforcement. The result is a user who is not coerced into belief, but rather gently immersed in it.

From Interrogation to Immersion

In the 20th century, psychological control was conducted in controlled environments—interrogation rooms, psychiatric wards, military barracks—spaces of explicit authority. Today, it occurs in open digital fields, where influence is seamless, constant, and often indistinguishable from entertainment.

Military indoctrination, particularly in basic training, remains one of the clearest consensual and legally sanctioned applications of depatterning and reprogramming. New recruits undergo psychological compression: deprivation, uniformity, repetition, ideological reinforcement, and group cohesion. They emerge with a new identity: one optimized for command, obedience, and unity.

This comparison matters because the same pattern, disruption followed by narrative reinforcement, is now enacted invisibly in the civilian population. But unlike the military, the digital equivalent requires no informed consent, no command structure, and no exit. There is no boot camp, only the feed.

Entrainment occurs continuously. The user is shaped not through orders, but through repetition. Not through commands, but through curation. Not by a drill sergeant, but by an algorithm—calibrated to their deepest cognitive reflexes.

Understanding the Threat Landscape

The threat of digital entrainment is not monolithic. It emerges in multiple forms, driven by different actors with divergent goals.

In one form, entrainment is emergent and profit-driven—platforms design systems to maximize user engagement, and in doing so, train users to respond to emotional content, polarized headlines, and behavior-reinforcing stimuli. There is no political agenda—only a behavioral one.

In another form, entrainment is deliberate and strategic. Adversarial state actors have used social media platforms to inject memetic content, simulate ideological communities, and cultivate division. These tactics—documented by the Stanford Internet Observatory and others—have been deployed in multiple countries, including the U.S., to exploit psychological vulnerabilities at scale.

And in a third form, psychological entrainment is outsourced. Private intelligence firms, political data consultancies, and algorithmic influence contractors offer services previously reserved for governments. Their methods include psychographic targeting, AI-guided narrative warfare, and synthetic engagement ecosystems.

In each case, the architecture is similar: large-scale behavioral data acquisition, AI optimization of exposure, emotional resonance mapping, and algorithmically structured repetition. Whether deployed for profit, power, or disruption, the result is the same: a user whose beliefs and identity are shaped without consent or recognition.

Evidence of Entrainment in Practice

Empirical research supports the claim that curated digital exposure has psychological effects. A landmark 2018 MIT study published in Science found that false stories on Twitter were 70% more likely to be retweeted than true ones, a difference the authors linked primarily to the novelty of false content and the emotional reactions it provoked. In a 2012 experiment published in 2014, Facebook researchers showed that altering the emotional content of users’ News Feeds produced small but statistically significant shifts in the emotional tone of those users’ own posts, demonstrating that emotional contagion could be triggered by platform logic alone.

Further work by Pew Research and academic institutions has documented increased belief polarization, narrowed worldviews, and identity hardening, and a growing, though still contested, body of research links these outcomes to long-term exposure to algorithmically sorted content. These effects are not accidental; they are predictable outputs of systems that optimize for emotional intensity, ideological reinforcement, and time-on-platform.

Entrainment, then, is not a conspiracy. It is a business model, a foreign policy tool, and a governance challenge rolled into one.

From Influence to Environmental Control

The most dangerous evolution of modern psychological systems is not that they influence thought—it is that they construct the environment in which thought occurs. Entrainment is no longer about influencing individual decisions, but about shaping the world as it is presented to the user.

These environments can be populated by:

AI-generated influencers tailored to user profiles

Fake social consensus simulated by bots or click farms

Emotionally tuned content loops optimized by reinforcement learning

Suppression algorithms that quietly erase dissenting narratives

In such systems, users can be immersed in synthetic reality environments—digital worlds that appear organic but are entirely constructed for influence. This is no longer exposure to biased information. This is life inside a belief system designed by external entities.

There is no need to erase a person’s memories or beliefs. One simply needs to control what they see, what they hear, and whom they think agrees with them. Over time, that becomes identity.

Frictionless Influence as the Norm

Unlike MKULTRA, this process needs no drugs, electrodes, or confinement. Today’s psychic driving is opt-in: the user chooses it daily. The most insidious feature of digital entrainment is its ordinariness.

That is what makes it so dangerous.

This is not mind control. It is mind environment control—the ability to structure what feels normal, believable, popular, and true. The power to shape perception now lies not with the state, but with platforms and actors that may be foreign, adversarial, automated, or simply indifferent to the consequences of psychological harm.

The result is a collapse in cognitive sovereignty—not because belief is coerced, but because users no longer know where their beliefs came from.

Unless democratic societies build mechanisms to detect, regulate, and counter digital entrainment, they will be unable to defend the integrity of individual or collective cognition. The challenge is not to eliminate influence—it is to govern the architectures of exposure that now shape what billions of people believe.

5. Algorithmic Influence as “Psychic Driving at Scale”

How Machine-Led Environments Achieve What Analog Experiments Could Not

What Donald Ewen Cameron attempted to accomplish through brute-force psychiatric experimentation—breaking down and reprogramming the human mind—is now being achieved, more subtly and more effectively, through AI-driven information ecosystems. Cameron’s psychic driving was crude, invasive, and ultimately discredited. Today’s systems are sophisticated, seamless, and deployed at planetary scale. But their underlying logic remains remarkably similar: the belief that identity, emotion, and behavior can be reshaped through engineered repetition and narrative saturation.

The modern evolution of psychic driving no longer requires force. It requires frictionless immersion—a state in which a user is surrounded by tailored content, repetitive cues, and emotionally charged signals designed to train attention, reinforce belief, and alter behavior through constant, adaptive exposure.

Psychic Driving, Digitized

At its core, Cameron’s psychic driving relied on three mechanisms:

Disruption of normal cognitive patterns through sedation, electroshock, and sensory deprivation.

Repetitive messaging designed to overwrite existing belief structures.

Enclosure within a sealed perceptual environment—the padded room, the coma, the looped tape—cut off from conflicting input.

In the digital age, these mechanisms have been reengineered into automated systems that:

Induce cognitive fatigue via information overload, outrage cycles, and scrolling addiction

Repeat emotionally charged messages tailored to the user’s psychometric profile

Enclose users within algorithmic filter bubbles, where disconfirmation is suppressed and narrative alignment is rewarded

The difference is no longer one of technique—it is one of scale, subtlety, and speed. What was once a laboratory procedure conducted on dozens of subjects is now a global infrastructure affecting billions of users daily.

Repetition and Narrative Saturation as Tools of Belief Engineering

Contemporary research in cognitive psychology confirms that repetition breeds belief. The illusory truth effect, for instance, shows that people are more likely to rate a false statement as true after encountering it multiple times, even when it contradicts knowledge they already hold. Modern algorithmic systems exploit this by:

Prioritizing content with high engagement, regardless of accuracy

Recirculating emotionally resonant messages to the same users repeatedly

Suppressing content that disrupts user expectations or causes cognitive dissonance

Reinforcing belief cohesion through social validation cues ("likes", shares, trending tags)

This is not passive exposure—it is belief conditioning, tuned in real time by machine-learning systems whose only goal is to increase behavioral efficiency: clicks, time-on-platform, ideological commitment, or conversion to a cause.

The algorithm does not care what you believe. It only cares that you believe more, faster, and in ways that are measurable.
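As a purely illustrative sketch of the first two mechanisms listed above, consider a hypothetical engagement-only feed ranker. It is not drawn from any platform's code; the names `Post`, `score`, and `rank_feed`, the `accuracy` field, and the `repetition_boost` weight are assumptions made for illustration. The structural point is that an accuracy score can sit on every item without ever entering the ranking, while prior exposure pushes familiar items back toward the top.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    predicted_engagement: float  # hypothetical model output in [0, 1]
    accuracy: float              # hypothetical fact-check score in [0, 1]; never used for ranking
    times_seen_by_user: int = 0  # how often this user has already seen this narrative


def score(post: Post, repetition_boost: float = 0.1) -> float:
    """Engagement-only score: accuracy is deliberately ignored."""
    # Each prior exposure nudges the item upward, so familiar narratives recur.
    return post.predicted_engagement * (1.0 + repetition_boost * post.times_seen_by_user)


def rank_feed(posts: list[Post], k: int = 3) -> list[str]:
    """Return the IDs of the top-k posts by engagement score."""
    ranked = sorted(posts, key=score, reverse=True)
    return [p.post_id for p in ranked[:k]]


# Invented example items; the numbers are illustrative, not measured.
feed = [
    Post("calm_correction", predicted_engagement=0.20, accuracy=0.95),
    Post("outrage_claim", predicted_engagement=0.60, accuracy=0.10, times_seen_by_user=3),
    Post("neutral_news", predicted_engagement=0.35, accuracy=0.90),
    Post("viral_rumor", predicted_engagement=0.55, accuracy=0.05),
]
print(rank_feed(feed))  # → ['outrage_claim', 'viral_rumor', 'neutral_news']
```

With these invented numbers, the low-accuracy, already-seen item ranks first and the accurate correction drops out of the top three, even though nothing in the code explicitly targets it.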

AI as a Scalable Agent of Influence

Cameron’s psychic driving was limited by human labor: a doctor, a tape, a room. Modern algorithmic influence systems are self-scaling and self-improving. They rely on:

Deep user profiling (psychographics, affective states, inferred vulnerabilities)

Reinforcement learning agents that continuously adapt content to maximize engagement

Language models capable of generating narrative variants for repeated exposure

Network amplification via bots, synthetic accounts, or hijacked influencers

In effect, today’s platforms and influence operations operate as massively parallel psychic driving machines, each user encased in a custom environment, optimized for repetition and emotional impact.

Unlike Cameron, who guessed what his patients needed to hear, modern systems can test large numbers of message variants continuously, measure which one performs best, and then scale its exposure across millions of targets.

This is not coercion. It is hyper-personalized persuasion without visibility or exit.
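The test-select-scale loop described above is, in its simplest textbook form, a multi-armed bandit problem. The sketch below uses epsilon-greedy selection, one standard bandit strategy, purely as an illustration; it assumes nothing about which technique any real platform or influence operation uses. The function name `epsilon_greedy`, the variant names, and the response rates are invented, and audience responses are simulated with a seeded random generator.

```python
import random


def epsilon_greedy(true_rates: dict[str, float], rounds: int = 20000,
                   epsilon: float = 0.1, seed: int = 0) -> dict[str, int]:
    """Simulate an epsilon-greedy bandit choosing among message variants.

    true_rates: hypothetical probability that each variant draws a response.
    Returns how many times each variant was shown.
    """
    rng = random.Random(seed)
    shows = {v: 0 for v in true_rates}
    wins = {v: 0 for v in true_rates}

    def estimate(v: str) -> float:
        # Observed response rate so far; an unseen variant gets top priority.
        return wins[v] / shows[v] if shows[v] else float("inf")

    for _ in range(rounds):
        if rng.random() < epsilon:
            variant = rng.choice(list(true_rates))   # explore: test a random variant
        else:
            variant = max(true_rates, key=estimate)  # exploit: show the current best
        shows[variant] += 1
        # Simulated audience response to this single exposure.
        if rng.random() < true_rates[variant]:
            wins[variant] += 1
    return shows


exposure = epsilon_greedy({"variant_a": 0.01, "variant_b": 0.03, "variant_c": 0.12})
print(exposure)
```

Because roughly nine rounds in ten exploit the current best estimate, exposure concentrates on whichever variant draws the most responses, while the remaining exploratory rounds keep testing alternatives in case a better one emerges.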

The Loss of Friction and the Death of Doubt

In Cameron’s era, psychic driving was terrifying because it was inescapable. In today’s world, what makes algorithmic psychic driving dangerous is its comfort. There is no struggle, no confrontation, no external command. There is only ease, affirmation, and emotional reinforcement.

Over time, this leads to a cognitive condition akin to learned certainty—a state in which the user no longer questions the origin of their beliefs, because the environment has never shown them otherwise. This is not brainwashing in the traditional sense. It is worldbuilding—constructing a synthetic reality whose parameters are designed not for truth, but for reinforcement.

When exposure is controlled, and repetition is tuned to the user’s profile, doubt becomes a design flaw—and so it is eliminated.

The Return of the Sealed Room—Now Invisible

Cameron needed a padded room to isolate his subjects. Today’s sealed rooms are digital and self-contained:

A Twitter thread that only includes reinforcing voices

A YouTube recommendation chain that can lead users from casual curiosity toward extreme content in a handful of clicks

A TikTok feed that learns your deepest insecurities and loops content to keep them active

A Discord server filled with AI-generated avatars agreeing with your worldview

This is the AI-enabled recreation of the psychic driving environment—but now invisible, interactive, and self-reinforcing. The user does not resist because the environment does not feel constructed. It feels organic. Natural. Personal.

But it is engineered. And over time, the difference between belief and exposure disappears.

Strategic Implications

The strategic impact of algorithmic psychic driving is enormous:

Adversarial state actors can inject targeted beliefs into populations and watch them take root through reinforcement engines

Private intelligence firms can use AI to simulate support, simulate consensus, or simulate grassroots momentum for political ends

Autonomous systems, once seeded with a behavioral goal, can evolve influence operations that no human designed in full

What was once a state monopoly on psychological manipulation has become a privatized, scalable, and deniable global industry. And unlike MKULTRA, it has produced no comparable public reckoning, because there is little public awareness of how it works.

The power to shape minds at scale no longer requires surveillance or torture. It requires only access to the systems that control exposure—and the algorithms that determine what gets repeated.

The future of belief is being decided by repetition curves inside codebases no one elected, no one audits, and few understand.

6. The Post-Trump Expansion of the Private Intelligence Industry

Decentralized Psychological Operations, Political Weaponization, and the Erosion of Democratic Oversight

The infrastructure for large-scale influence, surveillance, and psychological manipulation no longer belongs exclusively to nation-states. Beginning with the 2016 campaign and continuing through and after Donald Trump’s first presidential term (2017 to 2021), the United States witnessed an unprecedented proliferation of private intelligence firms, data-driven psychological operations, and covert influence architectures. Many of these entities operate in legal gray zones, exploiting gaps in accountability and classification to carry out strategic cognitive operations against both foreign and domestic populations.

This transformation marked a critical inflection point: what was once the purview of state-run agencies such as the CIA or NSA has become a globalized service economy of digital manipulation. In this new ecosystem, influence itself is a product—contracted, purchased, and deployed by political campaigns, corporations, foreign powers, and private billionaires.

From State Monopoly to Privatized PsyOps

The myth of state exclusivity in intelligence work shattered publicly with the revelations around Cambridge Analytica, a private data analytics firm that harvested Facebook user data without consent to build psychographic voter profiles. These profiles were used to microtarget emotional messaging designed to manipulate political attitudes during the 2016 U.S. election. Investigative reporting by The Guardian and The Observer exposed how these tactics, once reserved for black-budget psyops, had been productized and embedded directly into Western democratic elections. Carole Cadwalladr’s reporting revealed how Cambridge Analytica tested fear-based messages to steer behavioral outcomes and how deeply its operations were embedded in the Trump campaign.

However, Cambridge Analytica was not an isolated scandal; it was a proof of concept for what was to come. The New York Times later reported that in 2016 Psy-Group, an Israeli private intelligence firm staffed with former military operatives, had pitched the Trump campaign a portfolio of psychological warfare tools, including online avatar armies, fake news sites, and behavioral influence operations. Though the campaign denied formal collaboration, documents from the Mueller investigation confirmed the pitch and its alignment with digital tactics in active use at the time.

Around the same period, Black Cube, another Israeli-linked firm populated by former Mossad agents, was reportedly hired to target Obama-era officials who had negotiated the Iran nuclear deal. The firm also covertly surveilled and attempted to discredit journalists and victims in the Harvey Weinstein case using deep-cover identities and psychological pressure. These operations involved creating false personas and embedding them into social circles: classic infiltration tactics updated with digital augmentation.

Then came NSO Group, creator of the now-infamous Pegasus spyware. Its product enabled full-spectrum surveillance of smartphones with a single click or, in newer versions, without any user interaction at all. According to the Pegasus Project, a 2021 investigation by The Guardian and other media partners, coordinated by Forbidden Stories with forensic analysis from Amnesty International’s Security Lab, the spyware was sold to authoritarian regimes and deployed against journalists, human rights defenders, and political opponents. While NSO maintained that it sold only to vetted government clients, multiple investigations revealed its use against U.S. journalists, diplomats, and civil society actors.

What these firms demonstrate collectively is that the architecture of psychological manipulation, once contained within the state, has been fully commercialized. Intelligence-grade tools—avatar armies, sentiment weaponization, synthetic peer groups, geolocation targeting, and emotional contagion strategies—are now available for hire.

The Intelligence Vacuum and the Rise of Influence Mercenaries

From 2016 onward, the breakdown in U.S. institutional cohesion created a vacuum that private actors eagerly filled. Agencies like the Department of Homeland Security and the FBI faced internal demoralization and external political delegitimization. Meanwhile, figures like Michael Flynn, former National Security Advisor, and Steve Bannon, Trump’s chief strategist, openly advocated for the deployment of irregular psychological warfare in civilian spaces. Flynn called for “digital soldiers,” and Bannon built and distributed influence infrastructure through his “War Room” ecosystem, which combined podcasting, data farming, and live narrative shaping.

Political action committees aligned with Trump and right-wing populist groups then adopted psychometric targeting strategies. These operations were successors to the Cambridge Analytica model but were conducted under newer entities and shell contractors, utilizing AI-augmented media targeting that learned from platform engagement to maximize ideological commitment. The New York Times documented how these operations worked to “game the refs” in Silicon Valley, by flooding reporting mechanisms, suppressing counter-narratives, and amplifying emotionally manipulative content.

At the same time, foreign state actors like Russia, China, and Iran adapted quickly, injecting their narratives into these decentralized American networks and exploiting the lack of a boundary between foreign intelligence warfare and domestic political communication.

The Narrative-for-Hire Economy

These developments reflect a profound shift in the logic of psychological operations. Nation-states once used psyops for strategic deterrence or regime change. Today, private firms use them for contracts, clients, and political favors. This new economy of influence is governed not by democratic oversight or international law, but by opportunity and plausible deniability.

Unlike traditional spies or agents, these firms operate outside the diplomatic conventions and legislative oversight that at least nominally constrain state intelligence services. Their clients include governments, corporations, NGOs, and political campaigns. Their strategies are often indistinguishable from state-level information warfare, but their accountability mechanisms are nonexistent.

Importantly, they now integrate AI-generated content, synthetic avatars, and emotional resonance feedback loops, drawing from the same behavioral architecture described in earlier sections of this paper. They do not merely simulate consent—they simulate reality itself.

The result is a form of psychic driving without fingerprints. No electrodes. No padded room. Just feeds, repetition, and emotional saturation, deployed by entities that do not officially exist.

Strategic Consequences for Cognitive Sovereignty

This privatization of narrative warfare is a direct threat to cognitive sovereignty—the ability of a population to form beliefs and identities without manipulation by foreign agents, corporate interests, or political operatives operating in disguise.

Without strong legal oversight, digital transparency, and a national doctrine on cognitive rights, democratic societies will not be able to distinguish between authentic belief and constructed behavior. The informational environment itself becomes weaponized. In this condition, elections are no longer exercises in deliberative democracy—they are influence contests managed by narrative engineers, not citizens.

Left unchecked, this influence economy will continue to hollow out the public sphere, transforming the citizen into a target profile, and democracy into a managed simulation.

7. Legal Infrastructure for Domestic Narrative Manipulation – The Obama-Era Revisions

How Post-9/11 Statutes and Executive Actions Enabled State-Sanctioned Perception Management at Home

The United States once maintained a clear legal firewall between psychological operations abroad and information activity directed at its own population. This boundary was codified in long-standing statutes like the Smith-Mundt Act of 1948, which, as later amended, barred the State Department from disseminating at home the information programming it produced for audiences abroad. But by the start of the Obama administration’s second term, this legal distinction was quietly dismantled.

Amid rapid technological change, evolving global threats, and the rise of decentralized media, the federal government began expanding its informational authorities—not just for foreign disinformation defense, but also to shape domestic narratives under the auspices of resilience, cybersecurity, and counter-extremism.

The result was a transformation of the domestic information landscape, not through overt propaganda, but through a bureaucratically sanitized reintegration of psychological operations into national security strategy—blurring the lines between defense, persuasion, and manipulation.

The Smith-Mundt Modernization Act of 2012

The most pivotal moment in this transition came with the passage of the Smith-Mundt Modernization Act of 2012, buried within the National Defense Authorization Act (NDAA) for Fiscal Year 2013. This amendment effectively repealed key restrictions on domestic dissemination of government-produced media content.

The Smith-Mundt Act, as amended in the decades after 1948, forbade the U.S. State Department and, later, the Broadcasting Board of Governors (now the U.S. Agency for Global Media) from disseminating within the United States material produced for foreign audiences. This restriction recognized the dangers of state-controlled messaging in domestic political life.

But under the modernization amendment—introduced by Rep. Mac Thornberry (R-TX) and Rep. Adam Smith (D-WA)—that firewall was removed. The change was framed as a modernization for “public diplomacy in the age of social media,” allowing content produced for international audiences to be accessed by Americans online.

In practice, critics argued, it allowed these agencies to bypass prior constraints and redeploy psychological influence assets within the domestic media ecosystem, provided the messaging was framed as defense against foreign influence or part of “strategic communications.”

The Rise of Countering Violent Extremism (CVE) and Information Management

During the Obama administration, the Countering Violent Extremism (CVE) initiative was developed to identify and mitigate the roots of radicalization, particularly among Muslim-American youth. While initially focused on community engagement and education, CVE quickly became a vector for behavioral surveillance, pre-crime analytics, and narrative shaping.

The program often relied on data-driven risk modeling, targeted interventions based on psychometric profiling, and cooperation with social media platforms to shape “positive messaging” narratives. Although billed as a counterterrorism program, CVE laid the groundwork for domestic influence architecture that could map belief systems, identify ideological deviants, and proactively engineer exposure environments.

These early interventions normalized the idea that U.S. agencies could influence domestic belief ecosystems preemptively, outside of judicial processes or clear public oversight. The operational logic of CVE—prevent harm by managing thought vectors—would later echo in disinformation response frameworks, election influence monitoring, and pandemic-era narrative enforcement.

Fusion Centers and Behavioral Surveillance Infrastructure

The expansion of Fusion Centers—joint intelligence-sharing hubs between federal, state, and local authorities—also contributed to the drift toward domestic psyops. Originally developed after 9/11 to share counterterrorism intelligence, these centers evolved into real-time data aggregators, compiling social media posts, behavioral risk indicators, and even psychological profiling data.

By 2015, Fusion Centers operated across all fifty states and D.C., working with the Department of Homeland Security to monitor “emerging threats”—a term elastic enough to include protest movements, dissident speech, or ideological nonconformity.

Though they were not formally psyops centers, their ability to tag, track, and redirect belief actors through data coordination made them de facto components of domestic influence capability. Few legal barriers existed to prevent this mission creep.

Narrative Containment via Tech Partnerships

Parallel to these legal changes, the Obama administration significantly expanded government collaboration with social media and digital platforms, forming early iterations of what would become “public-private influence ecosystems.”

Agencies like DHS, the State Department, and the National Counterterrorism Center worked directly with Facebook, Twitter, YouTube, and Google to identify extremist content, flag dangerous narratives, and promote counter-messaging. In many cases, this involved preemptive suppression of lawful content that was deemed ideologically hazardous.

This collaboration was later institutionalized through initiatives like the Global Engagement Center (GEC), created in 2016 to coordinate U.S. messaging against disinformation and terrorism. Although originally chartered to target foreign actors, the GEC and associated initiatives routinely intersected with domestic perception management—especially when narratives became cross-border or when disinformation campaigns were suspected of influencing U.S. voters.

While the goal was to counter malign influence, the methods increasingly mirrored the architecture of influence itself: emotion tracking, content suppression, behavioral modeling, and exposure engineering.

The Precedent Set for Future Administrations

The legal and institutional changes made under the Obama administration were framed as defensive—tools to fight terrorism, prevent radicalization, and protect digital infrastructure. But in practice, they opened the door for the normalization of domestic influence operations, many of which could now be directed at Americans in ways that would have been illegal just a decade earlier.

By removing the legal firewall between foreign and domestic information operations, expanding CVE as a model for preemptive belief intervention, and forging deep tech partnerships without transparency, the administration created a toolkit that could be—and eventually was—retooled for political warfare, election narrative management, and pandemic information control.

These mechanisms would later be exploited and expanded by both private actors and state-adjacent influence operations in the Trump and Biden years—demonstrating that once built, such tools rarely remain constrained to their original mission.

8. Synthetic Reality Architectures and the Erosion of Shared Truth

How AI, Data Feedback Loops, and Private Influence Networks Are Reconstructing Reality Itself

The greatest threat to democratic society is no longer censorship, nor even surveillance in the classical sense. It is the strategic engineering of reality—the ability of AI-driven systems, private intelligence actors, and state-adjacent platforms to create immersive environments of perception so tailored, persistent, and self-reinforcing that the user becomes unable to meaningfully distinguish fact from fiction, consent from conditioning, or agency from exposure.

This is not a hypothetical future. It is an operational present.

Across political campaigns, social platforms, decentralized media ecosystems, and immersive entertainment technologies, a new form of informational dominance has emerged—one that doesn’t suppress information but simulates entire realities, calibrated to emotional resonance, ideological alignment, and behavioral compliance.

Where earlier sections described repetition-based influence, this section describes its terminal form: the enclosure of the human mind within synthetic architectures, where belief becomes inseparable from system exposure, and where truth is no longer a shared public resource, but a private, personalized simulation.

The Rise of Personalized Reality Constructs

The digital platforms that now serve as primary information sources for billions of people are not neutral mediums—they are environment-generating machines. Every scroll, click, share, or pause provides input to AI models that continuously adapt the information environment in real time.

The consequence is that no two individuals occupy the same narrative space. Each user is surrounded by:

Different facts

Different validators

Different emotional stimuli

Different representations of reality itself

This hyper-personalization turns the feed into a cognitive cell, locking the user into a custom perceptual world that feels organic but is engineered, not for truth, but for engagement. Over time, this creates epistemic solipsism—the belief that one’s informational universe is reflective of general reality, when in fact it has been algorithmically carved to exploit identity, reinforce emotion, and suppress dissonance.

What was once “bias” is now architecture. What was once a “filter bubble” is now operationalized isolation. What was once “targeted messaging” is now synthetic environment construction.
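The feedback loop described above can be illustrated with a deliberately simplified sketch. It assumes a recommender that always serves whichever topic has the highest running-average engagement (a greedy policy with optimistic starting estimates) and a user whose engagement with each topic is fixed. Both are illustrative assumptions rather than a description of any real platform’s system, and the topic names and affinity values are hypothetical.

```python
from collections import Counter


def simulate_feed(affinity: dict[str, float], steps: int) -> Counter:
    """Toy engagement-maximizing feed (hypothetical, for illustration only).

    Each step serves the topic with the highest estimated engagement, observes
    the user's engagement (assumed here to equal a fixed affinity value), and
    updates that topic's running-average estimate.
    """
    # Optimistic initial estimates guarantee every topic is tried at least once.
    estimates = {topic: 1.0 for topic in affinity}
    counts = {topic: 0 for topic in affinity}
    served: Counter = Counter()
    for _ in range(steps):
        topic = max(estimates, key=estimates.get)  # greedy: exploit the best estimate
        reward = affinity[topic]                   # assumption: deterministic engagement
        counts[topic] += 1
        estimates[topic] += (reward - estimates[topic]) / counts[topic]  # running mean
        served[topic] += 1
    return served


if __name__ == "__main__":
    user_affinity = {"outrage": 0.6, "local_news": 0.3, "science": 0.2}
    print(simulate_feed(user_affinity, steps=100))
```

With these inputs, each topic is shown once and the feed then locks onto the highest-engagement topic, so 98 of 100 items are “outrage.” Nothing in the loop asks whether content is accurate or diverse; engagement is the only signal it optimizes.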

AI and the Creation of Synthetic Social Consensus

An increasingly sophisticated layer of this architecture involves the simulation of social consensus through synthetic agents—AI-generated avatars, bots, influencers, and engagement networks that mimic human behavior and emotional response.

These systems are capable of:

Posting emotionally resonant comments at scale

Simulating likes, retweets, or upvotes to validate messages

Fabricating debates to guide user perception of social norms

Creating entirely fictitious political or ideological movements, complete with apparent mass support

To the human brain, consensus is evidence. When users see a message repeatedly affirmed, emotionally amplified, and socially endorsed, they internalize it as normatively valid—regardless of its origin.

Thus, synthetic agents—when embedded in feedback-optimized systems—don’t just influence opinion, they construct belief ecosystems that become indistinguishable from real human networks. And they do so at scale.
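A short worked example shows how little synthetic activity is needed to invert the apparent balance of opinion. The numbers are hypothetical, and the sketch assumes observers judge consensus by the raw share of visible endorsements, which is a simplification of how people actually read social signals.

```python
def support_share(agree: int, disagree: int) -> float:
    """Fraction of visible accounts that endorse a message."""
    total = agree + disagree
    return agree / total if total else 0.0


def apparent_vs_authentic(human_agree: int, human_disagree: int, bot_agree: int) -> tuple[float, float]:
    """Compare support among humans only with support as it appears once bots are mixed in."""
    authentic = support_share(human_agree, human_disagree)
    apparent = support_share(human_agree + bot_agree, human_disagree)
    return authentic, apparent


if __name__ == "__main__":
    # Hypothetical thread: 400 humans agree, 600 disagree, 800 undisclosed bots agree.
    authentic, apparent = apparent_vs_authentic(400, 600, 800)
    print(f"authentic support: {authentic:.0%}, apparent support: {apparent:.0%}")
```

Here a genuine 40 percent minority is presented as a 67 percent majority. In this example, any more than 200 undisclosed bots would already be enough to make the minority view look like the majority.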

Immersion, Not Persuasion

The most advanced forms of digital influence do not try to change beliefs through argument. They immerse users in digital atmospheres designed to make certain worldviews feel inevitable.

Whether through:

TikTok recommendation loops

Discord community immersion

Deepfake-driven media ecosystems

“Dark pattern” newsfeed architectures

AI-powered “reality influencers” trained on user-specific anxieties and aspirations

…the goal is the same: to immerse the user in a narrative world so complete, so emotionally congruent, and so socially validated that resistance never arises.

In this state, the mind is not overcome by coercion, but by coherence. The world makes sense—and that is its most powerful deception.

The Erosion of Shared Truth

The aggregate consequence of synthetic reality architectures is the fragmentation of reality itself. No longer does society operate with shared epistemic ground. Instead:

Each ideological community lives in its own simulation

Each belief system has its own media, validators, and internal logic

Each user becomes a node in a reality silo, hermetically sealed by algorithmic feedback

At this point, traditional concepts like “fact-checking” or “debunking” become inert. They rely on a shared cognitive framework that no longer exists. A person immersed in a synthetic perceptual system cannot be argued with—they are not just wrong about facts; they are living in a different world.

And that world has been built for them.

Strategic Risks and National Security Implications

The strategic implications of synthetic realities are severe:

Adversarial actors can build belief systems inside target populations—complete with simulated consensus, synthetic leaders, and false oppositions.

Cognitive fractures can be engineered—not by suppressing truth, but by oversaturating the environment with engineered fictions that feel more real than facts.

Democratic functions like elections, legislation, and protest lose legitimacy when consensus about basic events no longer exists.

Radicalization becomes frictionless, because the simulation rewards emotional alignment with engineered incentives.

At scale, this becomes narrative warfare waged as immersive reality construction—far beyond traditional disinformation. This is informational terraforming.

Why Cognitive Sovereignty Must Now Include Environmental Integrity

In this new context, the concept of cognitive sovereignty must be updated. It is no longer sufficient to protect the individual’s right to free thought or speech. Sovereignty must now include the right to a reality not covertly constructed by adversarial or unaccountable systems.

This means:

Auditing the architecture of digital exposure, not just its content

Detecting synthetic actors, not just their outputs

Preserving friction, doubt, and disagreement as psychological immune functions

Building ethical infrastructure for exposure curation, where truth has structural support, not just rhetorical defenders

If the government does not protect the psychological environment of its citizens, someone else will control it—whether foreign states, private firms, or autonomous systems optimizing for goals no human set.

In the age of synthetic realities, a clear, safe thought may itself require governance. Not censorship. Not indoctrination. But protection from ambient manipulation architectures designed to erase doubt, override agency, and colonize belief.

9. A Framework for Cognitive Sovereignty in the Age of Digital Entrainment

Designing the Architecture to Defend Autonomy in a Reality Under Siege

As this paper has demonstrated, we are no longer debating whether truth is under threat. We are witnessing the systematic virtualization of reality itself, engineered by algorithmic infrastructures and amplified by behavioral data markets that prioritize manipulation over meaning.

The implications are no longer philosophical. They are geostrategic. The ability of a nation to govern itself now depends on its ability to protect the cognitive terrain of its citizens from adversarial simulations, synthetic consensus mechanisms, and automated entrainment environments.

Thus, we must treat cognitive sovereignty not as a metaphor—but as a matter of national survival.

Cognitive Sovereignty – A Working Definition

Cognitive sovereignty is the epistemological and strategic condition in which individuals and democratic societies maintain the capacity to perceive reality, form beliefs, and deliberate freely, without covert manipulation by automated systems, adversarial networks, or immersive synthetic environments.

It is the psychological analogue to territorial sovereignty and the precondition for meaningful democratic agency.

In the same way that the Westphalian model defined the right of states to resist external interference, cognitive sovereignty must define the right of human minds to resist informational colonization in the digital age.

The Five Pillars of National Cognitive Defense

To operationalize this doctrine, we propose a national strategy built on five interlocking pillars. These are drawn from precedent models in cybersecurity, critical infrastructure policy, and international information security frameworks. Each pillar includes strategic goals, institutional pathways, and comparative references.

Pillar I: Narrative Infrastructure Transparency

Strategic Objective: Demystify and disclose the algorithms that shape digital perception.

Mandate public algorithm audits for platforms whose recommender systems influence political or ideological exposure (see Diakopoulos, 2016).

Require narrative provenance tracing—digital footprints showing how content is ranked, targeted, and repeated across time.

Grant independent epistemic oversight to third-party research institutions (e.g., NSF-backed labs) empowered to evaluate algorithmic influence patterns.

International Reference: European Union’s Digital Services Act; Finland’s platform accountability pilots.

Scholarly Foundation: Pasquale (2015) on "The Black Box Society"; Noble (2018) on algorithmic bias.
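To make narrative provenance tracing more concrete, the sketch below shows one hypothetical shape for a provenance record. Each time a platform ranks and shows an item, it logs which item, to which audience segment, at what rank, and for what stated reason. The field names, the segment labels, and the premise that platforms would expose such a log are all assumptions for illustration. No existing standard or platform API is implied.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ExposureEvent:
    """One hypothetical provenance entry: a single ranked impression."""
    content_id: str
    segment: str     # audience segment the item was targeted to (assumed label)
    rank: int        # position in the feed, 1 = top
    reason: str      # ranking signal the platform reports (assumed field)
    timestamp: int   # seconds since epoch


def repetition_by_segment(events: list[ExposureEvent]) -> Counter:
    """Count how often each item was shown to each segment."""
    return Counter((e.content_id, e.segment) for e in events)


def top_slot_share(events: list[ExposureEvent], content_id: str) -> float:
    """Share of an item's impressions that landed in the top feed slot."""
    shown = [e for e in events if e.content_id == content_id]
    if not shown:
        return 0.0
    return sum(1 for e in shown if e.rank == 1) / len(shown)


if __name__ == "__main__":
    log = [
        ExposureEvent("clip-17", "segment-A", 1, "predicted_engagement", 1_700_000_000),
        ExposureEvent("clip-17", "segment-A", 1, "predicted_engagement", 1_700_000_600),
        ExposureEvent("clip-17", "segment-B", 9, "recency", 1_700_000_900),
    ]
    print(repetition_by_segment(log))
    print(top_slot_share(log, "clip-17"))
```

An auditor holding such a log could see that one segment received the same clip twice in the top slot while another saw it once, far down the feed. That differential repetition is exactly what the pillar asks platforms to make traceable.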

Pillar II: Synthetic Actor Disclosure and Prohibition of Simulated Consensus

Strategic Objective: Eliminate undisclosed AI and bot-driven influence campaigns.

Require legal labeling of all non-human digital agents (avatars, chatbots, AI influencers) interacting with public discourse.

Treat the simulation of mass support (e.g., fake followers, synthetic movements) as a form of digital counterfeiting, akin to financial fraud.

Establish a publicly searchable Synthetic Agent Registry (SAR), with real-time tracking and deactivation protocols.

Comparative Model: Taiwan’s cyber transparency initiative; U.S. FARA (Foreign Agents Registration Act) analog for algorithmic agents.

Empirical Evidence: Shao et al. (2018); Ferrara et al. (2016) on bot-amplified polarization during the 2016 U.S. election.
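At its simplest, the proposed Synthetic Agent Registry could be a lookup table mapping account identifiers to a declared operator and an active flag, which a platform consults before rendering a post. The sketch below assumes exactly that. The class name, fields, and label wording are hypothetical, and a real registry would also need identity verification, audit logging, and due-process rules for deactivation, none of which are modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentRecord:
    """Registry entry for one declared non-human account (hypothetical schema)."""
    operator: str       # person or organization legally responsible for the agent
    purpose: str        # declared purpose, e.g. "customer support"
    active: bool = True


class SyntheticAgentRegistry:
    """In-memory sketch of a Synthetic Agent Registry (SAR)."""

    def __init__(self) -> None:
        self._records: dict[str, AgentRecord] = {}

    def register(self, account_id: str, operator: str, purpose: str) -> None:
        self._records[account_id] = AgentRecord(operator, purpose)

    def deactivate(self, account_id: str) -> bool:
        """Mark an agent inactive; returns False if it was never registered."""
        record = self._records.get(account_id)
        if record is None:
            return False
        record.active = False
        return True

    def disclosure_label(self, account_id: str) -> Optional[str]:
        """Label a platform would attach to a post, or None for unregistered accounts."""
        record = self._records.get(account_id)
        if record is None:
            return None
        status = "active" if record.active else "deactivated"
        return f"Automated account operated by {record.operator} ({record.purpose}; {status})"
```

Unregistered accounts return None, which is the case a registry cannot resolve on its own: detecting undeclared bots remains a separate and harder problem, which is why the pillar pairs disclosure with penalties for simulated consensus.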

Pillar III: Cognitive Exposure Hygiene and Psychological Risk Protocols

Strategic Objective: Develop exposure standards that treat psychological manipulation like environmental contamination.

Classify excessive repetition, emotional saturation, and algorithmic radicalization as risk vectors subject to regulatory mitigation.

Introduce cognitive “nutrition labels” on high-velocity, high-volume content clusters (e.g., TikTok, YouTube Shorts).

Commission the National Institute of Mental Health (NIMH) and CDC to define "cognitive toxicity thresholds" for digital environments.

Scientific Basis: Sapolsky (2017) on stress and repetition; Zuboff (2019) on behavioral futures markets; Sunstein (2017) on cascade effects.

Policy Analog: FDA dietary standards; WHO guidelines on light and noise exposure.
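A cognitive nutrition label would need measurable inputs. The sketch below assumes each item in a content cluster has already been tagged with a narrative identifier and an emotional-arousal score between 0 and 1 (for example by a classifier, which is not shown). It then reports how repetitive the cluster is and what share of it is high-arousal. The field names and the 0.7 arousal cutoff are illustrative assumptions, not values drawn from NIMH, CDC, or any published standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    narrative_id: str  # items pushing the same claim share an id (assumed tagging)
    arousal: float     # 0.0 (calm) to 1.0 (highly arousing), assumed classifier output


def nutrition_label(items: list[Item], arousal_cutoff: float = 0.7) -> dict[str, float]:
    """Summarize repetition and emotional saturation for one content cluster."""
    if not items:
        return {"items": 0, "distinct_narratives": 0,
                "repetition_ratio": 0.0, "high_arousal_share": 0.0}
    distinct = len({item.narrative_id for item in items})
    high = sum(1 for item in items if item.arousal >= arousal_cutoff)
    return {
        "items": len(items),
        "distinct_narratives": distinct,
        # 0.0 means every item carries a different narrative; near 1.0 means heavy repetition
        "repetition_ratio": 1 - distinct / len(items),
        "high_arousal_share": high / len(items),
    }


if __name__ == "__main__":
    cluster = [Item("n1", 0.9)] * 6 + [Item("n2", 0.8)] * 2 + [Item("n2", 0.2)] * 2
    print(nutrition_label(cluster))
```

A cluster of ten items carrying only two narratives, eight of them highly arousing, would score 0.8 on both measures: the kind of profile the pillar would flag as a repetition and saturation risk.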

Pillar IV: National Cognitive Immunology and Resilience Education

Strategic Objective: Arm citizens with the tools to defend their own cognition.

Launch a National Epistemic Hygiene Curriculum, modeled after Finland’s cognitive resilience programs (praised by NATO StratCom).

Include modules in emotional regulation, exposure pattern awareness, disinformation forensics, and synthetic environment detection.

Develop certification for public servants, educators, and media professionals in narrative integrity analysis.

Scholarly Foundation: Kahneman (2011), Tversky, Gigerenzer on decision-making under uncertainty; Wardle & Derakhshan (2017) on misinformation ecosystems.

Pillar V: Civic Algorithmic Governance and Epistemic Infrastructure Development

Strategic Objective: Replace surveillance capitalism with ethical, public-interest algorithms.

Fund the creation of open-source, non-profit recommendation systems for public media platforms.

Establish federal standards for algorithmic ethics in environments that mediate civic knowledge (e.g., news, education, voting).

Treat exposure algorithms as public utilities—regulated not for revenue, but for epistemic health and pluralism.

Comparative Reference: Taiwan’s vTaiwan model; civic tech ecosystems in Estonia and Barcelona.

Theoretical Basis: McLuhan on media shaping cognition; Benkler et al. (2018) on epistemic fragmentation in networked democracies.

Toward a Cognitive Sovereignty Index

Just as nations track GDP or cybersecurity readiness, the U.S. must develop a Cognitive Sovereignty Index (CSI)—a quantitative and qualitative framework for assessing:

Exposure Entropy: How ideologically diverse are user feeds?

Synthetic Influence Risk: What percent of content comes from non-human actors?

Trust Thermography: How fragmented are institutional trust patterns?

Narrative Coherence Health: Is there a shared baseline of verifiable facts?

This index can guide policymaking, inform platform regulation, and benchmark national resilience.
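Two of the four proposed measures lend themselves to direct computation. One plausible reading of Exposure Entropy is the Shannon entropy of the ideological categories in a user’s feed, normalized so that 0 means a single category and 1 means every category appears equally often. Synthetic Influence Risk can be read as the share of items authored by accounts labeled non-human. Both readings, the category scheme, and the availability of authorship labels are assumptions; the index as proposed does not specify formulas.

```python
import math
from collections import Counter


def exposure_entropy(feed_categories: list[str], num_categories: int) -> float:
    """Normalized Shannon entropy of a feed's category mix (0 = one category, 1 = perfectly even).

    num_categories is the size of the full category scheme, so a feed showing
    only one category scores 0 instead of being undefined.
    """
    if not feed_categories or num_categories < 2:
        return 0.0
    total = len(feed_categories)
    # H = sum over categories of p * log2(1 / p), in bits
    entropy = sum((n / total) * math.log2(total / n) for n in Counter(feed_categories).values())
    return entropy / math.log2(num_categories)


def synthetic_share(authored_by_bot: list[bool]) -> float:
    """Fraction of feed items attributed to non-human accounts."""
    return sum(authored_by_bot) / len(authored_by_bot) if authored_by_bot else 0.0


if __name__ == "__main__":
    feed = ["left"] * 8 + ["center"] * 2
    print(round(exposure_entropy(feed, num_categories=3), 2))
    print(synthetic_share([True, False, False, True]))
```

Under this reading, a feed of eight “left” items and two “center” items scores about 0.46 on a three-category scheme, while a perfectly balanced feed scores 1.0. Normalizing by the full scheme, rather than by the categories actually observed, keeps narrow feeds from looking artificially diverse.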

Conclusion: The Mind as Infrastructure, Perception as Sovereignty

We are entering a reality where the mind is not only targeted, but increasingly constructed—where exposure, repetition, and affective curation converge to pre-shape belief before deliberation can begin.

This is not theoretical. It is operational.

Without a doctrine of cognitive sovereignty, democratic societies will not merely lose their consensus. They will lose the conditions under which consensus can even emerge.

“As the 20th century built nuclear containment doctrines to manage existential physical risks, the 21st must build cognitive containment doctrines to manage existential epistemic threats.”

If we fail to act, we cede our agency not to tyrants—but to code. Not to armies—but to architectures. Not to ideology—but to infrastructure.

The defense of cognition is the defense of liberty itself.

10. Conclusions and Strategic Recommendations

This Is Not About Information. It Is About Reality.

The age of information manipulation is over. What we are now witnessing is the systematic replacement of reality itself—engineered at scale, driven by algorithms, and imperceptibly tailored to every human subject connected to a network.

This white paper has traced the path from analog psychic driving experiments under MKULTRA to today’s digitally immersive, algorithmically curated, and behaviorally entraining architectures of influence. These are no longer theoretical systems. They are operational, global, and increasingly autonomous.

They do not seek to change what people think. They seek to determine what people are allowed to perceive.

The Collapse of Cognitive Sovereignty

What was once the sovereign territory of the human mind is now contested infrastructure. The ability to hold a thought, to form a belief, to arrive at an understanding unshaped by invisible systems—this is no longer a guaranteed human freedom. It is now a privilege governed by exposure architecture.

The distinction between truth and narrative has been erased. Exposure is mistaken for knowledge. Consensus is fabricated by machines. Emotional saturation replaces empirical understanding.

This is not just the erosion of fact. It is the engineering of perception. And it will not stop on its own.

Without intervention, the very notion of individual autonomy, political agency, and democratic participation becomes functionally obsolete. Citizens will be unable to think outside the systems that design their perception.

Strategic Threat Vectors

AI-driven content environments now deploy modified forms of psychic driving (repetition, behavioral nudging, emotional depatterning) at a scale far beyond anything Ewen Cameron or the architects of MKULTRA could have imagined.

Private intelligence firms and influence contractors operate beyond the bounds of national oversight, often working across elections, protests, and media ecosystems to simulate movements, seed chaos, or construct belief.

Post-9/11 legal frameworks, particularly reforms under the Obama administration, created the legal gray space in which domestic narrative control could be quietly deployed under the language of resilience, counter-extremism, or public safety.

Synthetic realities, populated by AI-generated peers, emotionally resonant content loops, and deepfake consensus engines, now pose an existential threat to epistemic stability and national cohesion.

The Choice Before Us

We are no longer debating whether citizens should be shielded from “fake news.” We are now deciding whether human cognition itself should remain a free, self-governing system—or whether it will be subsumed by immersive simulation environments optimized for control, not understanding.

There is no neutral position. There is no safe indecision.

To delay is to concede.

To wait is to lose the initiative to adversarial actors—whether foreign intelligence networks, rogue AI ecosystems, or domestic contractors incentivized to destabilize democratic institutions for profit.

Strategic Recommendations

1. Declare Cognitive Sovereignty as National Security Infrastructure

Human mental autonomy must be defined and defended as rigorously as borders, water supplies, or the energy grid. It is the infrastructure upon which all other freedoms depend.

2. Establish a Civilian Cognitive Defense Command

The Command should be an independent civilian entity capable of monitoring large-scale influence environments, auditing immersive simulations, detecting adversarial narrative engines, and issuing public exposure alerts in real time.

3. Mandate Algorithmic Transparency and Exposure Provenance

Platforms must be required to provide users with detailed metadata on content targeting, emotional profiling, exposure patterning, and synthetic consensus mechanics. If a belief was engineered, the engineering should be traceable.

4. Criminalize Undisclosed Synthetic Narrative Operations

The use of bots, AI-generated personas, or covert reality-simulation tactics to influence democratic populations without disclosure or consent must be treated as a form of cognitive sabotage and carry criminal and financial penalties.

5. Launch a National Cognitive Immunology Initiative

Teach every citizen the fundamentals of cognitive defense:

How to recognize manipulation patterns

How to detect emotional entrainment

How to resist consensus simulation

How to distinguish informational density from genuine coherence with reality

6. Create Public Narrative Platforms with Ethical Recommendation Engines

The information commons should not be governed by opaque, profit-optimized engagement loops. We must design civic spaces where the algorithm itself is audited, ethical, and in service of human epistemic development rather than corporate dopamine extraction.

The Moral Imperative of Now

If we fail to act, we are not simply ceding influence. We are allowing the systematic extinction of reality literacy.

We are permitting the enclosure of the human imagination within customized perceptual prisons, with no bars, no guards, and no escape—only infinite comfort, emotional alignment, and psychological compromise.

This is not a future threat. It is already here. It is live, active, and evolving.

We must reclaim the architecture of belief. We must defend the possibility of truth. We must make cognitive sovereignty the cornerstone of 21st-century democratic survival.

Because once reality itself can be rewritten, there will be no “later” to recover it.

The defense of perception is the defense of civilization. There is nothing more urgent.